The beauty of Stochastic Gradient Descent (SGD)

Stochastic Gradient Descent (SGD) is the go-to method for optimization problems whose objective is a sum (or average) of many individual functions, typically one per data point. Its key strength is speed: it moves rapidly toward a good solution, although how close it gets to the true optimum depends on the chosen step size. In practice, it quickly produces estimates that are accurate enough for prediction, even if they are not exact minimizers.
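To make the finite-sum setting concrete, here is a minimal sketch in Python with NumPy. It assumes a least-squares objective f(x) = (1/n) Σᵢ ½(aᵢᵀx − bᵢ)², chosen purely for illustration. Each SGD step samples one index i and moves against the gradient of that single term ∇fᵢ(x). The function name `sgd_least_squares`, the constant step size, and the synthetic data are hypothetical choices, not taken from the lecture.

```python
import numpy as np


def sgd_least_squares(A, b, step=0.01, epochs=50, seed=0):
    """Plain SGD on f(x) = (1/n) * sum_i 0.5 * (a_i @ x - b_i)**2.

    Hypothetical helper for illustration. Each update uses the gradient of
    ONE randomly sampled term f_i, so an iteration costs O(d) work instead
    of O(n*d) for the full gradient.
    """
    rng = np.random.default_rng(seed)
    n, d = A.shape
    x = np.zeros(d)
    for _ in range(epochs):
        for _ in range(n):
            i = rng.integers(n)                # sample one term uniformly at random
            grad_i = (A[i] @ x - b[i]) * A[i]  # gradient of f_i at the current x
            x -= step * grad_i                 # x <- x - step * grad f_i(x)
    return x


# Synthetic, hypothetical data: n = 1000 examples, d = 5 features.
rng = np.random.default_rng(1)
A = rng.standard_normal((1000, 5))
x_true = np.arange(1.0, 6.0)
b = A @ x_true + 0.01 * rng.standard_normal(1000)

x_sgd = sgd_least_squares(A, b, step=0.01, epochs=20)
x_ls = np.linalg.lstsq(A, b, rcond=None)[0]  # exact least-squares solution for comparison
print("distance from least-squares solution:", np.linalg.norm(x_sgd - x_ls))
```

With a constant step size, the iterates settle into a noisy neighborhood of the minimizer whose size shrinks as the step shrinks. A decaying schedule (for example, a step proportional to 1/k at iteration k) is the usual remedy when higher accuracy is needed.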

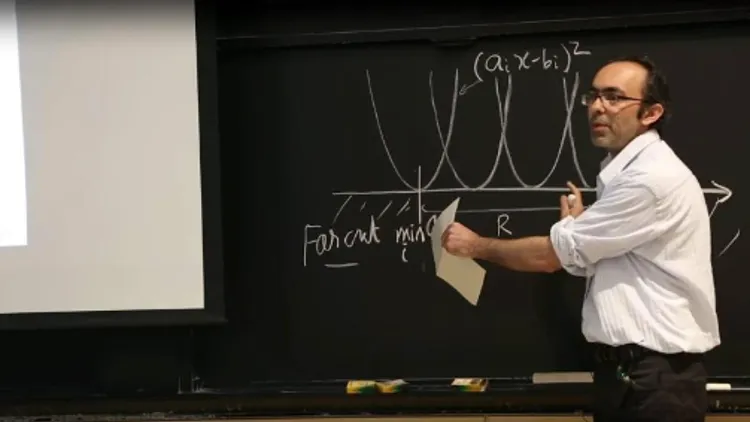

SGD also sharply reduces the computation needed to reach a useful solution, because each iteration evaluates the gradient of a single term (or a small batch of terms) rather than all of them. This makes it the workhorse for training prediction models on massive datasets, where the number of examples *n* runs into the millions and the dimension *d* may as well, as is often the case with high-definition images. In this video, Professor Suvrit Sra explains the power of SGD in an engaging and instructive lecture.
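The cost saving can be written out for the finite-sum objective. A full gradient-descent step touches all n terms, while an SGD step touches one randomly chosen term whose gradient is, in expectation, equal to the full gradient. The per-step costs below assume that each ∇fᵢ costs O(d) to evaluate, as it does for linear models; η denotes the step size.

```latex
\begin{aligned}
f(x) &= \frac{1}{n}\sum_{i=1}^{n} f_i(x), \qquad x \in \mathbb{R}^d \\
\text{GD:}\quad x_{k+1} &= x_k - \eta\,\frac{1}{n}\sum_{i=1}^{n} \nabla f_i(x_k)
  && \text{cost per step: } O(nd) \\
\text{SGD:}\quad x_{k+1} &= x_k - \eta\,\nabla f_{i_k}(x_k), \quad i_k \sim \mathrm{Unif}\{1,\dots,n\}
  && \text{cost per step: } O(d) \\
\mathbb{E}_{i_k}\!\left[\nabla f_{i_k}(x)\right] &= \frac{1}{n}\sum_{i=1}^{n} \nabla f_i(x) = \nabla f(x)
\end{aligned}
```

For example, with n = d = 10^6, a single full-gradient step costs on the order of 10^12 operations, whereas a single SGD step costs on the order of 10^6.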

View the lecture at https://youtube.com/watch?v=k3AiUhwHQ28&si=gbACB95JYU7SYlQm